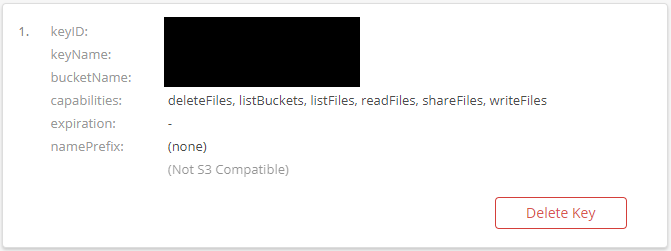

Welcome to the Forums! This is not a newbie question, no worries; it is actually quite common. The short answer is that you are correct about what you have seen from Backblaze.

In order to leverage Object Storage as a Backup Repository, you need to combine it with a Scale-out Backup Repository (SOBR) so that you have different tiers: the Performance Tier is your current storage, where your backups are, and the Capacity Tier is the Object Storage. There is one more, the Archive Tier, but it is not important for this question. In future releases, you will be able to select Object Storage directly as a Backup Repo for Backup or Backup Copy jobs, but meanwhile, yes, you need to create a SOBR.

I understand that you do not want all your backups to go to Object Storage, so for now the only way to achieve this is to create more logical local repositories, meaning folders on your current repository, then create normal Backup Repositories, move there the backups that you want to keep out of the cloud, and point the jobs to those new repositories. Leverage this KB in order to move backup files. Then, create a SOBR with the Backup Repos that hold the backups you want to COPY/MOVE to Backblaze, and you are good to go.

After starting to host my own private Mastodon instance, it became clear that disk usage was going to be a problem. I eventually moved all the assets to an S3-compatible Object Storage. Now I'm moving away from Backblaze B2 as an S3 provider to Scaleway. I wanted to highlight why and how I did it, which is trivial when you know what to do.

Why?

It's funny that it all came down to a currency and payment issue (not pricing).

- Both providers are super cheap and have generous free tiers.
- Backblaze speed for deleting objects: slow ❌
- Scaleway speed for deleting objects: faster ✅
- Both providers have data centers in the EU ✅✅

My credit card is linked to a Spanish bank, and they charge me a fee for every purchase I make in currencies other than EUR. So the actual S3 Object Storage cost was being inflated by my bank's fees. In hindsight, I should've known this when I signed up for Backblaze.

Want to know how I migrated? Here's how:

1. Create a snapshot of the bucket

From B2 you can create a zip (snapshot), then you just download it. It might take a few hours to generate depending on your bucket size, though. Mine was about 50 GB and took around 5 hours.

While waiting for the zip to be generated with the existing assets, I updated ~/live/.env.production with the new values from the Scaleway bucket (as well as the new API credentials generated within Scaleway, so Mastodon can upload objects). (More on this here.) Since I'm a single private instance, I could do this step now.
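To make the `~/live/.env.production` step of the Mastodon migration above more concrete, here is a minimal sketch of the object storage settings involved. The variable names are Mastodon's standard S3 settings; the bucket name, the region (`fr-par`), and the credentials are placeholder assumptions, not values from the original post, so substitute whatever your own Scaleway console shows.

```shell
# ~/live/.env.production (object storage section only)
# Every value below is an illustrative placeholder, not the author's real setting.

S3_ENABLED=true
S3_PROTOCOL=https

# Hypothetical bucket name
S3_BUCKET=my-mastodon-media

# Assumed region; pick the one your bucket was created in
S3_REGION=fr-par

# Scaleway S3-compatible endpoint for the assumed region
S3_ENDPOINT=https://s3.fr-par.scw.cloud
S3_HOSTNAME=s3.fr-par.scw.cloud

# API credentials generated within Scaleway (placeholders)
AWS_ACCESS_KEY_ID=SCWXXXXXXXXXXXXXXXXX
AWS_SECRET_ACCESS_KEY=00000000-0000-0000-0000-000000000000
```

Mastodon reads these values at startup, so the services most likely need a restart before the new bucket is used.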
